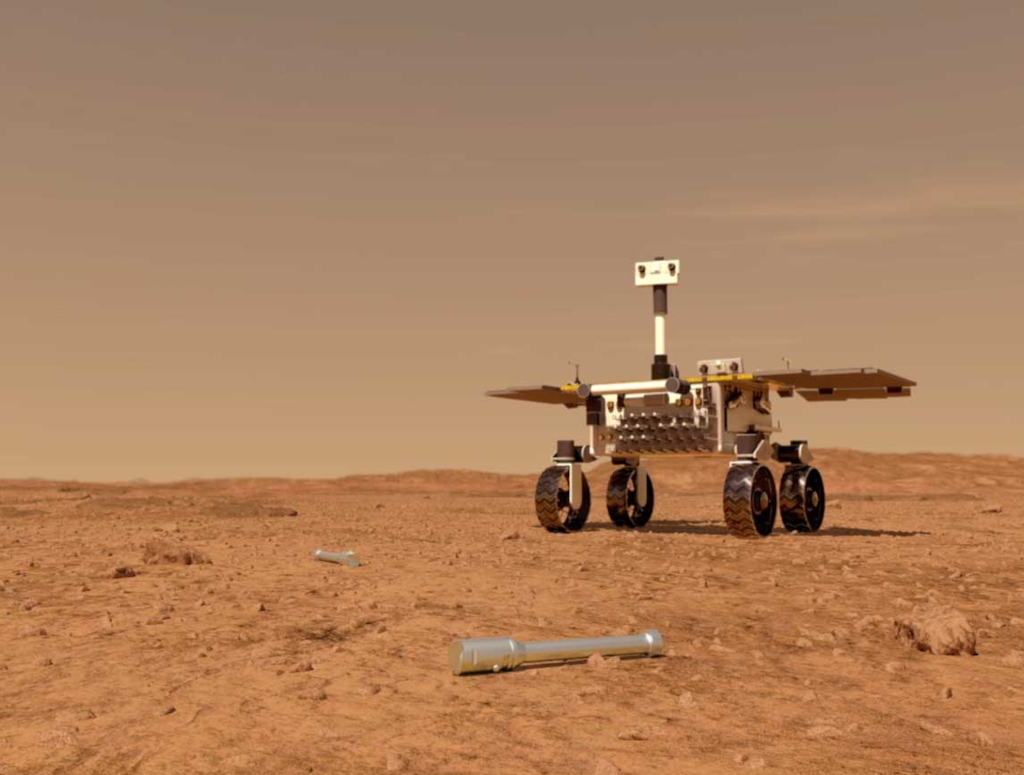

Our technological growth has taken us on a fantastic journey. In the last 25 years we have gone from wired phones in our houses to powerful computers strapped to our wrists. We can transfer money to the other side of the world in fractions of a second. Whole autonomous organisations can be constructed from smart contracts distributed across the digital world. I can authenticate to my bank through facial recognition. My favourite websites know me better than I know myself, and can act as smart agents, predicting what I would like, where I would want to go, how I might vote…

This digital world is one of data and machines: locations, behaviours, machine learning predictions, automated interactions. These abilities give us the power to do great things, but in gaining them we have also gained the power to do immense damage.

How do we navigate this new world and maintain integrity? How do we cross ever-more amazing frontiers without losing our ethical direction?

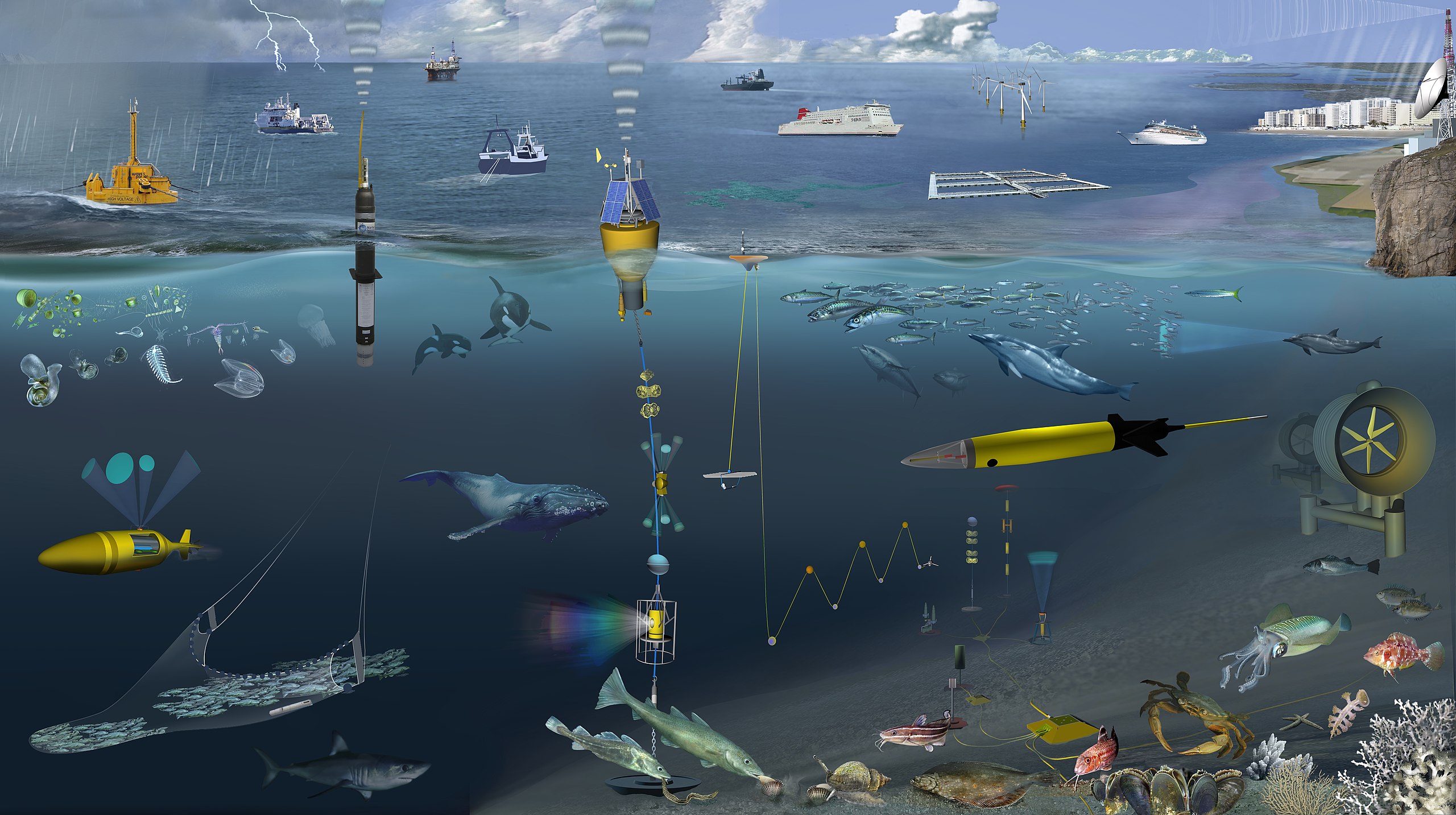

Mapping a course in a sea of choices

A seismic shift has taken place in society and business, driven by the rapid expansion of the internet into all corners of our lives. As this growth has progressed, and the cost of storage and compute has dropped, it has been accompanied by exponential growth in data generation. Wherever we make contact with the digital world we leave an imprint: the clicks on a webpage, the items we buy online, the music we stream, the video channels we watch.

As the wealth of data has grown, so has the ability to shape online experiences to our personal tastes. The behavioural models behind our favourite websites can ensure we don’t miss upcoming TV shows we might like. In an online store of millions of items, they can craft a custom window onto the store that makes the things we actually care about visible to us, instead of letting them disappear into the background noise of products.

It is this ability to tailor the web to people’s own interests and preferences that has allowed the internet, and the businesses that exist there, to scale successfully. Without the AI models behind online search, it would be extremely difficult to find what we are looking for among billions of websites. Product recommendations focus customers on the items they are interested in, separating these out from the millions they would otherwise have to browse through.

Troubled waters

“Life is what happens when you’re busy making other plans.”

John Lennon

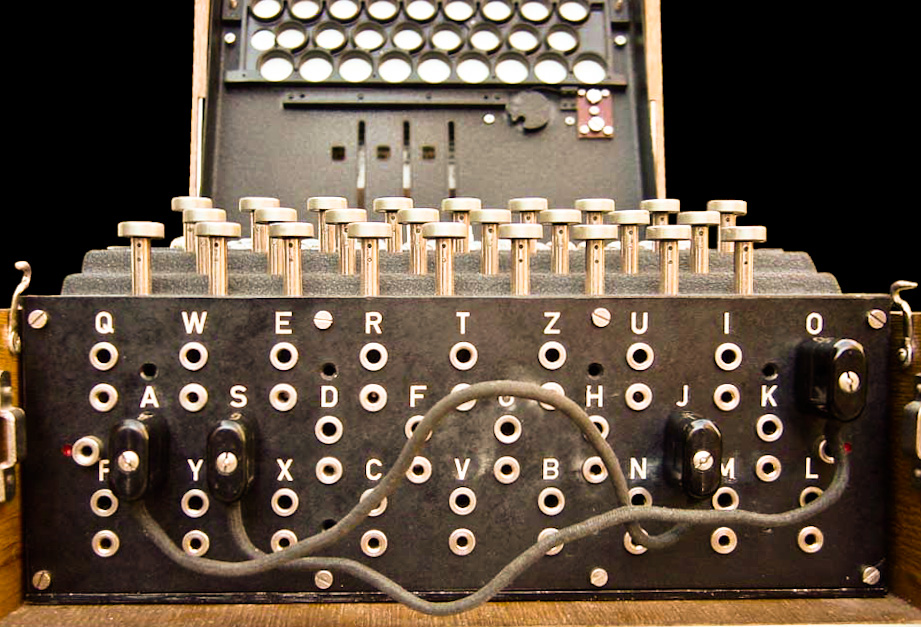

As organisations learn more about their customers, the user experience improves greatly, but this comes with a great deal of responsibility for how that data is used and protected. Security of user data is, and always has been, a key requirement for any business. People entrust us with passwords (which, realistically, they reuse across multiple sites), credit card details, home addresses and so on. As with any theft, the exposure of this data has a real impact on individuals and can cost them both financially and emotionally. The growing use of AI modelling has added a further, if more subtle, form of misuse. The data involved might not identify individuals directly, but the impact can be just as personal as the loss of personal data. Recent examples range from the inadvertent revelation of something a person did not want surfaced, such as their product choices leading a model to correctly predict a pregnancy, causing significant emotional harm, to large-scale behavioural modelling campaigns aimed at influencing a country’s elections and the opinions and intentions of its residents.

The unexpected dangers of passive data

“I’m playing all the right notes, but not necessarily in the right order.”

Eric Morecambe

With such high stakes in the use of people’s data and the modelling of human behaviour, we can be tempted to respond cautiously and do as little as possible. Unfortunately, taking a passive, neutral stance can allow substantial problems to grow unchecked. A common example lies in the biases present in many large datasets. Simply collecting image data passively and using it to train facial recognition software, for instance, can produce racial and gender biases. With facial recognition now used in situations ranging from phone authentication to, in some countries, law enforcement, the impact of this bias is truly unacceptable. These datasets are also often reused many times in both academic and commercial settings, spreading the bias further. The growing integration of these technologies into our lives, and the large impact they can have on individuals and society at large, has sharpened the focus on actively improving models through targeted data collection and constant monitoring for bias.

In short, we need to actively plan for ethical use of behavioural data, otherwise we can sleepwalk into dangerous applications. Those who own data and behavioural models ‘own wrongness’: they take on responsibility for the consequences when poor data quality and sampling bias surface as unfair algorithms brought into use.
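As one concrete illustration of the constant monitoring for bias described above, a minimal sketch might compare a model’s accuracy across demographic groups on a labelled evaluation set and flag large gaps. The function names, the use of plain accuracy as the metric, the 0.05 tolerance and the toy data are all illustrative assumptions rather than an established standard; a real audit would likely examine several metrics per group, such as false positive and false negative rates.

```python
from collections import defaultdict


def per_group_accuracy(y_true, y_pred, groups):
    """Return the fraction of correct predictions for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == pred)
    return {g: correct[g] / total[g] for g in total}


def flag_accuracy_gap(acc_by_group, max_gap=0.05):
    """Return (gap, flagged): the spread between the best- and worst-served
    groups, and whether it exceeds the hypothetical tolerance max_gap."""
    gap = max(acc_by_group.values()) - min(acc_by_group.values())
    return gap, gap > max_gap


# Toy evaluation data, invented purely for illustration.
y_true = [1, 1, 0, 1, 0, 1]
y_pred = [1, 1, 0, 0, 1, 1]
groups = ["a", "a", "a", "b", "b", "b"]

acc = per_group_accuracy(y_true, y_pred, groups)
gap, flagged = flag_accuracy_gap(acc)
print(acc, round(gap, 3), flagged)
```

In this toy data the model is right for every member of group "a" but only one in three members of group "b", so the gap is flagged. Note that evaluating by group requires holding the sensitive attribute itself, which carries the same data-protection responsibilities discussed earlier.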

An ethical compass in a digital world

“The biggest challenge, I think, is always maintaining your moral compass.”

Barack Obama

So, given the challenge of keeping our use of technology purely positive, how do we steer ourselves past the rocks when choosing how to use it? Is it enough to set our moral compass purely by thinking about what customers want? That would assume we can act as arbiters of other people’s choices. In addition, as the power and complexity of modelling has increased, and with the subtle effects of combining massive amounts of seemingly unrelated metadata, even understanding the questions we are deciding on can require deep knowledge of what this modelling actually does.

So what are the pillars of good choices? There are some obvious, and some less obvious, ways in which we can help ourselves make good decisions about behavioural prediction and avoid drifting into accidental misuse of that responsibility.

Making good choices

“The worst thing you can do is nothing.”

Terry Pratchett

Is this prediction accurate?

The most basic of questions can sometimes be overlooked. Before considering other aspects of modelling and user experience, we first need to ask whether the data we’re relying on is correct in the first place. A misspelled name can mean that a person’s details cannot be found, and stale data can lead to inaccurate predictions. Those who own data are responsible both for ensuring its accuracy and for having mitigations in place for when it is not accurate.

Is this prediction ethical?

Accuracy may be the first focus of modelling, but it can come to dominate the conversation. Correctly predicting someone’s behavioural preferences loses all meaning when the prediction itself is damaging or embarrassing for the people concerned. Research shows that models can accurately predict sexuality, ethnicity, and religious and political views from seemingly unrelated data available online and in social media. As a general rule, if modelling produces results that seem overly personal, or that would be damaging if made public, this is a clear indication of unethical use and should be avoided at all costs.

Making public choices

Making decisions in public brings reputational benefits and helps build a relationship with broader society, but this commitment also drives key behaviours internally, both among individuals and across the organisation as a whole. Firstly, being open about both the choices we make and how we justified them to ourselves drives us to question our motivations and trade-offs deeply, helping us avoid missing key details or impacts and ensuring we don’t sweep the difficult moral elements under the carpet. Secondly, it forms a strong social contract: by publicising our decisions and moral judgements, we implicitly strengthen our resolve to stick by them.

Transparency and collaborative ethics

Making our decisions in public also helps prevent competitive market forces from eroding our ethical standards. By acting transparently we encourage and challenge our peers to do the same, pushing back against the public goods dilemma: the pressure on organisations to lower their ethical standards to gain a competitive advantage, which can set off a race to the bottom.

Scaled responsibility

Deferring ethical responsibility is common within large organisations: the impacts of our choices are passed to higher and higher levels, or between teams, where context, ownership, and human contact with the outcomes of decisions are lost or diluted. Good choices cannot live purely in defined processes and ethical review boards; they must live where decisions are truly made, by individuals and small groups, every day. The organisation’s role is to provide broad ethical guidelines, both to meet legal requirements and to express the principles that define its culture. Beyond that, it must empower the individuals within it to take responsibility for choices within those guidelines, support them when they are challenged, and expect them to challenge the organisation’s own ethical stances without fear of repercussions.

In conclusion

“Any tool can be used for good or bad. It’s really the ethics of the artist using it.”

John Knoll

Digital technologies have truly changed society and our individual lives, and this power will only grow as machine learning and quantum computing develop. The situation is much like the one medicine faced: when we realised as a society how much we could now do, we also became aware of the moral risks and choices in front of us. The ambiguous nature of many real-world decisions, combined with the ever-larger impact of those choices, meant that we had to collaboratively establish ethical guiding lights to help find the right path forward.

References

Facebook–Cambridge Analytica data scandal

Effect of Cambridge Analytica’s Facebook ads on the 2016 US Presidential Election

What are the biases in my word embedding?

Racial Discrimination in Face Recognition Technology

What Do We Do About the Biases in AI?

Private traits and attributes are predictable from digital records of human behavior