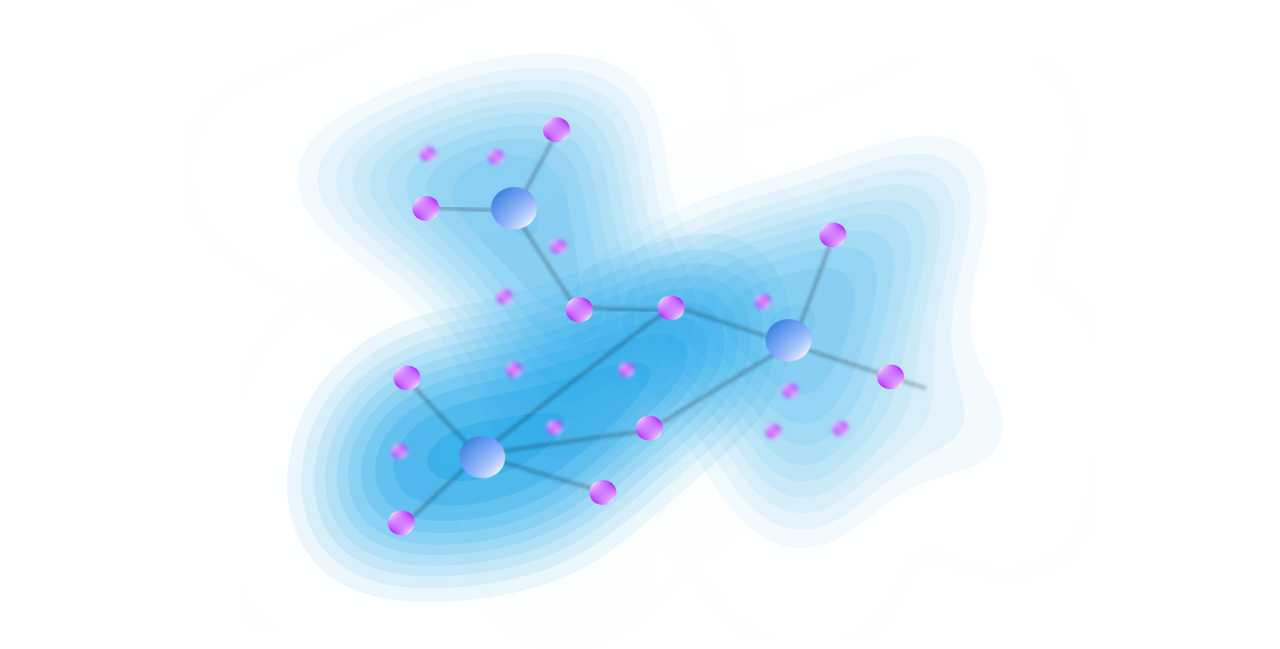

This is the introductory poster for the Multi-agent learning in dynamic systems series. In systems with a large number of agents there are fundamental pressures on the centralised coordination techniques used to provide inter-communication, task orchestration, and routing of messages. As the scale of interacting components expands, we reach resource constraint plateaus, where computation, storage, or communication pathways become saturated. At these points we must decompose each agents functionality into a number of specialisms that can then be taken up by other agents, at the cost of even more orchestration communications and synchronisation to provide this distributed functionality. To provide solutions to tackle these issues we focus on distributed agent systems where reinforcement learning behaviours are constrained by resource usage limits and hence by local neighbourhood awareness rather than global system knowledge.

Multi-agent learning in dynamic systems Focused on applying reinforcement learning techniques to multi-agent systems where the environment is dynamic, and realistic resource constraints exist. This work combines task-allocation optimisation, resource allocation, and self-organising hierarchical agent structures.

In this work we develop algorithms for autonomous intelligent agents within a distributed multi-agent system that enable agents to learn and repeatedly adapt a subset of state-action space while also exploiting it to achieve a goal.Through the investigation of these systems we also provide some insight and define concepts that illustrate the behaviours of agents under these conditions that prove useful to build further contributions, including the use of constrained local neighbourhoods as units of scalability in large distributed systems.